We are thrilled to announce that the paper titled “eSports Broadcasts with Event Cameras,” authored by Yaping Zhao, Rongzhou Chen, Chutian Wang, and Prof. Edmund Y. Lam, has been accepted for presentation at the ICONIP 2024 conference.

Comparison of the scene and of fine motor skills, as captured with a traditional camera and with an event camera:

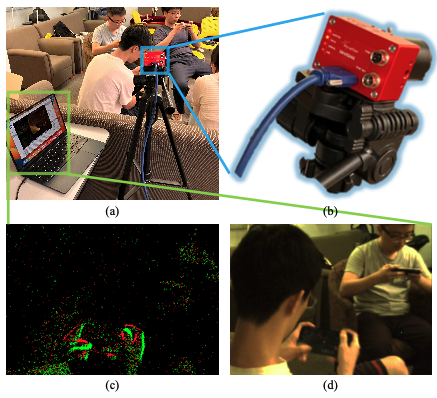

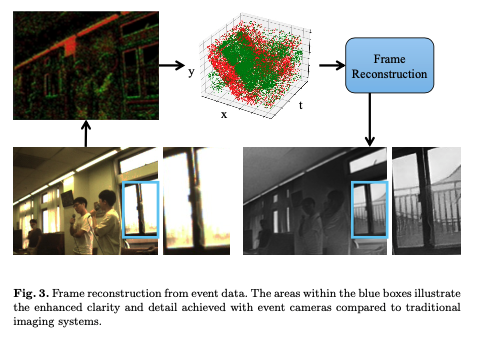

This research, led by Prof. Lam, introduces event cameras to eSports broadcasting as a way around the limitations of traditional cameras. Rather than recording full frames at a fixed rate, an event camera reports per-pixel brightness changes as a stream of asynchronous events. This gives it high temporal resolution and a wide dynamic range, and it largely avoids the motion blur that traditional cameras produce in fast-paced eSports environments.
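To make the idea of brightness-change events concrete, here is a minimal sketch of the idealized event-generation model commonly used to describe event cameras: a pixel emits an event whenever its log intensity moves by at least a contrast threshold from the last reference level. This is an illustration only, not code from the paper; the names `Event` and `events_from_pixel` and the threshold value of 0.2 are assumptions chosen for the example.

```python
import math
from typing import List, NamedTuple, Sequence, Tuple


class Event(NamedTuple):
    t: float       # timestamp in seconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 for brighter, -1 for darker


def events_from_pixel(
    samples: Sequence[Tuple[float, float]],
    x: int,
    y: int,
    threshold: float = 0.2,  # hypothetical contrast threshold (log units)
) -> List[Event]:
    """Simulate one pixel of an idealized event camera.

    `samples` is a time-ordered sequence of (timestamp, intensity) pairs
    with intensity > 0. An event fires each time the log intensity has
    moved by `threshold` from the current reference level.
    """
    events: List[Event] = []
    if not samples:
        return events
    ref = math.log(samples[0][1])  # reference log intensity
    for t, intensity in samples[1:]:
        diff = math.log(intensity) - ref
        # A large change can cross the threshold several times.
        while abs(diff) >= threshold:
            polarity = 1 if diff > 0 else -1
            events.append(Event(t, x, y, polarity))
            ref += polarity * threshold
            diff = math.log(intensity) - ref
    return events
```

A pixel whose brightness stays constant produces no events at all, which is why a mostly static scene with a few fast-moving hands yields a sparse stream concentrated on the motion.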

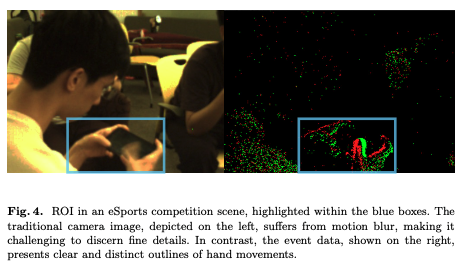

The team, under Prof. Lam’s guidance, developed an efficient algorithm that extracts regions of interest (ROIs) from event data. The algorithm pinpoints rapid hand movements so that the broadcast can focus on these critical actions. This approach supports directorial decisions and improves viewer engagement by delivering clearer, more detailed coverage of eSports competitions.
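The announcement does not describe the ROI algorithm itself, so the following is only a rough sketch of one simple way such an extraction could work, not the authors' method. It assumes events arrive as `(t, x, y, polarity)` tuples, bins them into a coarse grid, and returns the grid cell with the most activity as a bounding box. The function name `extract_roi` and the parameters `cell` and `min_events` are hypothetical.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), half-open on x1 and y1


def extract_roi(
    events: Iterable[Tuple[float, int, int, int]],
    width: int,
    height: int,
    cell: int = 16,       # hypothetical grid cell size in pixels
    min_events: int = 1,  # hypothetical minimum activity to report an ROI
) -> Optional[Box]:
    """Return the bounding box of the busiest grid cell, or None.

    Fast motion (for example a player's hand) generates many events in a
    small area, so the cell with the highest event count is a crude proxy
    for the region of interest.
    """
    counts: Counter = Counter()
    for _t, x, y, _polarity in events:
        if 0 <= x < width and 0 <= y < height:  # ignore out-of-frame events
            counts[(x // cell, y // cell)] += 1
    if not counts:
        return None
    (cx, cy), n = counts.most_common(1)[0]
    if n < min_events:
        return None
    x0, y0 = cx * cell, cy * cell
    # Clamp the box to the sensor bounds for cells on the right/bottom edge.
    return (x0, y0, min(x0 + cell, width), min(y0 + cell, height))
```

For instance, ten events clustered around pixel (40, 20) plus a couple of stray events elsewhere would give the box `(32, 16, 48, 32)` with the default 16-pixel cells. A production system would likely add temporal windowing and noise filtering, which this sketch omits.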

In addition to proposing this application, the research contributes a dataset collected from eSports competition scenes, which is used to compare event cameras with standard RGB cameras. The findings indicate that event cameras capture nuanced motions considerably better than traditional cameras, setting a new benchmark for broadcast quality in eSports.

This work represents a major advancement in the field of sports broadcasting, promising to elevate the viewing experience and set new standards for the industry. Congratulations to Prof. Lam and the entire team for their remarkable achievement!

For more details, please refer to the full paper on GitHub.

Keywords: Neuromorphic Imaging, Event Camera, Computational Imaging, Video Processing, Dynamic Range, Motion Blur, Sports.